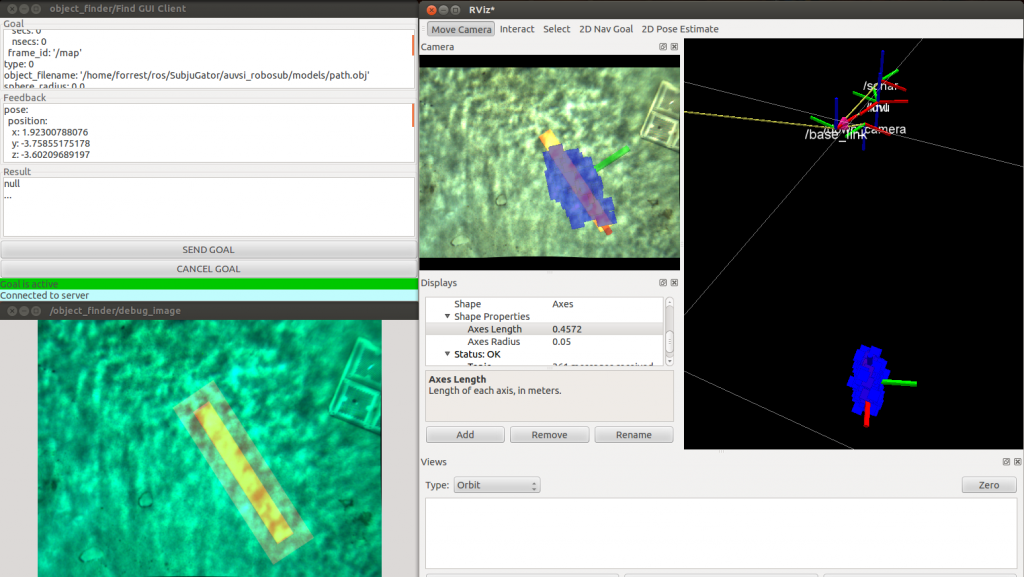

This year, we’re approaching vision in a different way. In the past, we’ve segmented images using various thresholding algorithms and then classified the resulting contours based on size and shape. Our experimental software instead uses particle filtering: it makes numerous guesses at the pose of the object being searched for, then ranks them by how likely they are to be true given the images received from the camera. The output quickly converges to the true 3D pose of the object, even though only monocular inputs are being used!
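For readers unfamiliar with particle filtering, the guess-and-rank loop can be sketched roughly as follows. This is a toy illustration, not the software described above: the function names, the noise values, and especially the `likelihood` function are assumptions. Here the likelihood is a simple Gaussian around a known pose, standing in for a model that would score each guess against real camera images.

```python
import math
import random

def particle_filter_step(particles, likelihood, motion_noise, rng):
    """One predict/weight/resample cycle over a list of pose guesses."""
    # Predict: jitter each guess so the filter keeps exploring nearby poses.
    predicted = [
        tuple(c + rng.gauss(0.0, motion_noise) for c in pose)
        for pose in particles
    ]
    # Weight: score each guess by how well it explains the observation.
    weights = [likelihood(pose) for pose in predicted]
    if sum(weights) <= 0.0:
        # No guess explains the observation; treat them all as equally likely.
        weights = [1.0] * len(predicted)
    # Resample: draw a new population in proportion to the weights.
    return rng.choices(predicted, weights=weights, k=len(predicted))

def estimate(particles):
    """Mean pose of the particle population."""
    n = len(particles)
    dims = len(particles[0])
    return tuple(sum(p[i] for p in particles) / n for i in range(dims))

def demo(seed=0):
    rng = random.Random(seed)
    true_pose = (1.0, -0.5, 3.0)  # hypothetical (x, y, z) position in metres

    def likelihood(pose):
        # Assumed stand-in for an image-based model: Gaussian falloff
        # with distance from the true pose (sigma = 0.5 m).
        d2 = sum((a - b) ** 2 for a, b in zip(pose, true_pose))
        return math.exp(-d2 / (2.0 * 0.5 ** 2))

    # Start with guesses spread uniformly over a 10 m cube.
    particles = [
        tuple(rng.uniform(-5.0, 5.0) for _ in range(3)) for _ in range(2000)
    ]
    for _ in range(30):
        particles = particle_filter_step(particles, likelihood, 0.1, rng)
    return estimate(particles), true_pose
```

Calling `demo()` returns the filter's mean estimate alongside the true pose. Because the population carries over from one step to the next, each new estimate builds on all earlier observations rather than on a single frame.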

We hope that this work will yield a more robust solution for locating the objects used underwater in the AUVSI RoboSub competition. The probability model that the particle filter uses doesn’t look for edges or contours, but instead works directly on image data, which suggests that it will be more robust against saturation and other artifacts that commonly impede edge detection. In addition, the particle filter has memory, so its estimates can be more accurate than anything that could be determined from a single frame. That memory should also let us handle transient interference, such as bubbles or particulates, without resorting to manual filtering of the output.

In the future, we plan to integrate the output of our imaging sonar into the pose estimates. Our particle filter provides a very clean framework for fusing multiple sources of data, so we anticipate that this will go well. We expect that the sonar will primarily speed up initial acquisition of the object.
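One common way to fuse sensors in a particle filter is to multiply their likelihoods when weighting each guess, which assumes the sensors' errors are independent given the pose. The sketch below illustrates that idea only; it is not a description of the planned integration. Both likelihood functions, their parameters, and the assumption that the sonar reports a single range are hypothetical.

```python
import math

def camera_likelihood(pose, observed_bearing, sigma=0.05):
    """Hypothetical camera model: compares only the projected direction."""
    x, y, z = pose  # assumes z > 0, i.e. the object is in front of the camera
    du = x / z - observed_bearing[0]
    dv = y / z - observed_bearing[1]
    return math.exp(-(du * du + dv * dv) / (2.0 * sigma ** 2))

def sonar_likelihood(pose, observed_range, sigma=0.2):
    """Hypothetical sonar model: compares only the distance to the object."""
    r = math.sqrt(sum(c * c for c in pose))
    return math.exp(-((r - observed_range) ** 2) / (2.0 * sigma ** 2))

def fused_weight(pose, observed_bearing, observed_range):
    """Particle weight under the conditional-independence assumption."""
    return (camera_likelihood(pose, observed_bearing)
            * sonar_likelihood(pose, observed_range))

# Two guesses on the same ray from the camera: one correct, one twice as far.
true_pose = (1.0, -0.5, 3.0)
far_pose = (2.0, -1.0, 6.0)
bearing = (true_pose[0] / true_pose[2], true_pose[1] / true_pose[2])
rng_m = math.sqrt(sum(c * c for c in true_pose))
```

In this toy setup the camera term alone cannot tell `true_pose` from `far_pose`, since both project to the same direction, while the fused weight strongly favors the correct range. A single range-aware measurement can therefore prune many guesses at once.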